Fashion-MNIST: Year In Review

It’s been one year since I released the Fashion-MNIST dataset in Aug. 2017. As I wrote in the README.md, Fashion-MNIST is intended to serve as a drop-in replacement for the original MNIST dataset, helping people benchmark and understand machine learning algorithms. Over the past year, I have seen many trends and developments in the machine learning field in this direction. The dataset has received much love from researchers, engineers, students and enthusiasts in the community.

On the web today, you can find thousands of discussions, code snippets and tutorials about Fashion-MNIST from all over the world. On GitHub, Fashion-MNIST has collected 4000 stars; it is referenced in more than 400 repositories, 1000 commits and 7000 code snippets. On Google Scholar, more than 250 academic research papers conduct their experiments on Fashion-MNIST. It is even mentioned in Science magazine from AAAS. On Kaggle, it is one of the most popular datasets, accompanied by more than 300 Kernels. All mainstream deep learning frameworks have native support for Fashion-MNIST. With just one line of import, you are ready to go.

Needless to say, Fashion-MNIST is a successful project. In this post, I will briefly review the important milestones it has achieved over the past year.

Where it all starts

It was an ordinary summer day at Zalando Research. My boss Roland Vollgraf asked me to survey generative networks and implement one or two to get familiar with the topic. So I did, and tested my implementation on MNIST. But I got bored quickly: the task was too trivial on MNIST, as all generated images seemed equally good regardless of model complexity. So I decided to make it more challenging by throwing random fashion images from our in-house SKU database into the model. This of course required a new input pipeline to load and transform the data properly, which I was too lazy to write. So I preprocessed those fashion images offline and stored them in the MNIST format. That was the very first version of Fashion-MNIST.
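For readers curious what "the MNIST format" means in practice, here is a minimal sketch of the IDX image layout: a big-endian header (magic number, image count, rows, columns) followed by raw unsigned-byte pixels. The function names are made up for illustration, and this is not the original preprocessing script, only a round-trip of the file layout. The published files are additionally gzip-compressed, which this sketch leaves out.

```python
import struct

# IDX magic number for image files: two zero bytes, 0x08 (unsigned byte
# data type), 0x03 (three dimensions: image index, row, column).
IDX_IMAGE_MAGIC = 0x00000803


def write_idx_images(images):
    """Serialize equally sized 2-D images (lists of rows of 0..255 ints) to IDX bytes."""
    n = len(images)
    rows = len(images[0])
    cols = len(images[0][0])
    # Header: four big-endian unsigned 32-bit integers.
    header = struct.pack(">IIII", IDX_IMAGE_MAGIC, n, rows, cols)
    # Pixels are stored row by row, image by image, one byte each.
    pixels = bytes(p for img in images for row in img for p in row)
    return header + pixels


def read_idx_images(blob):
    """Parse IDX image bytes back into nested lists of pixel values."""
    magic, n, rows, cols = struct.unpack(">IIII", blob[:16])
    if magic != IDX_IMAGE_MAGIC:
        raise ValueError("not an IDX unsigned-byte image file")
    data = blob[16:]
    size = rows * cols
    return [
        [list(data[i * size + r * cols: i * size + (r + 1) * cols]) for r in range(rows)]
        for i in range(n)
    ]
```

Because the layout matches MNIST byte for byte, any loader written for MNIST can read such a file unchanged, which is what makes a drop-in replacement possible.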

While playing with this new toy, I kept improving its quality and later made it an inner-source project. With encouragement from my former colleagues Kashif Rasul and Lauri Apple, we finally decided to publish it on GitHub and arXiv. The rest of the story is probably known to you: the dataset was first discussed on Reddit's r/MachineLearning, then quickly spread across Hacker News, GitHub, Twitter, Facebook and other social networks. A few days later, even Yann LeCun himself posted Fashion-MNIST on his Facebook page.

I’d like to give special thanks to Lauri Apple, who encouraged and supported me during this journey. In the beginning, I was sometimes mocked for the idea of “replacing MNIST”; people didn’t believe the community would accept it. Lauri stood up and told me that the people who actually make change are the ones who believe that change is possible.

In Academics

Today, as I write this post, 260 academic papers either cite or conduct their experiments on Fashion-MNIST according to Google Scholar. After some cleaning and a quick read-through, I collected 247 of them and put them into a spreadsheet. So who wrote those papers? What are their research problems? Where are they published? In what follows, I will share those interesting statistics with you.

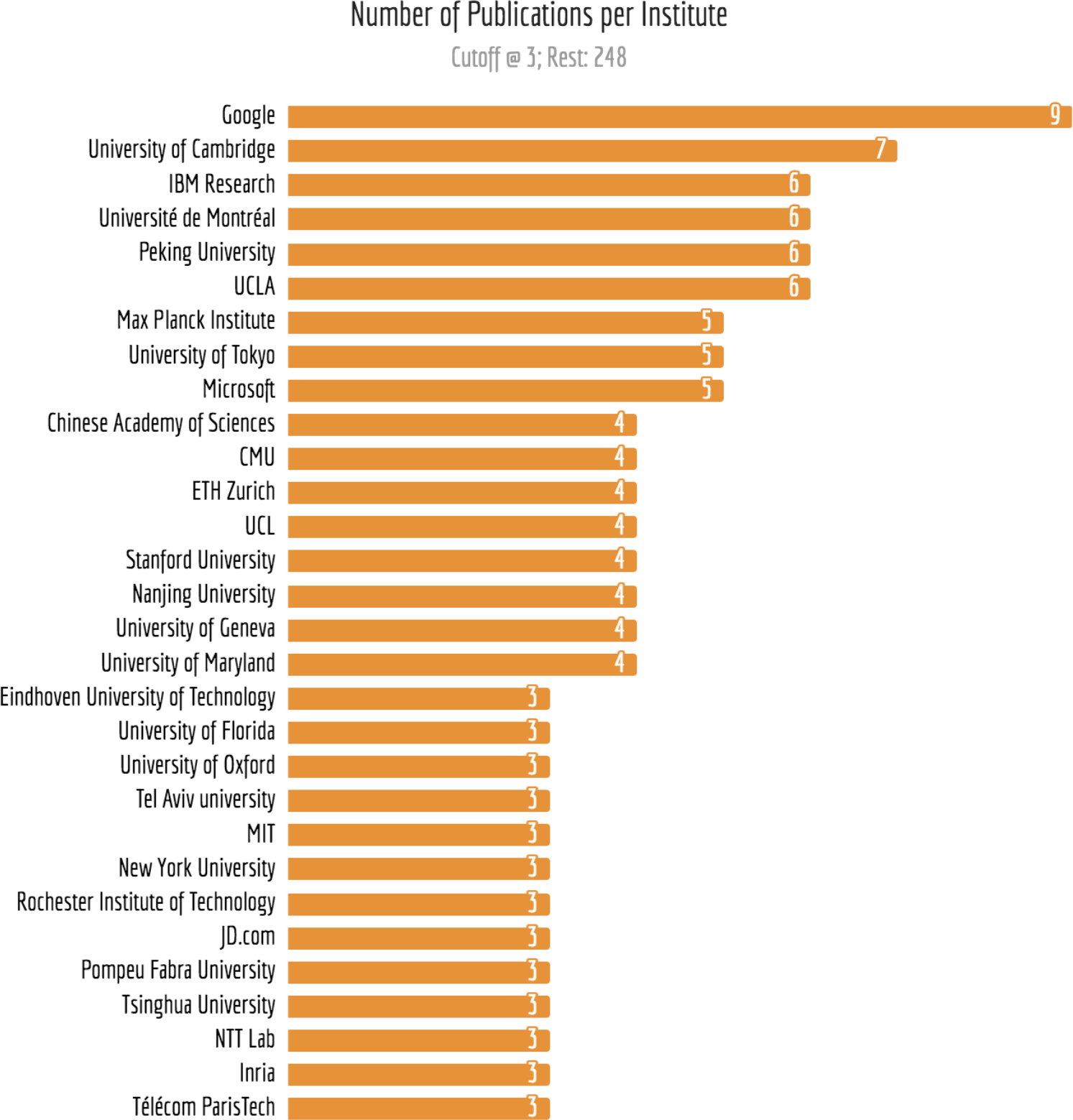

Top AI Institutes Love Fashion-MNIST

The figure below shows the number of publications per institute. Note that if one publication is co-authored by multiple institutes, I add one for each of them. Multiple authors from the same institute are counted only once per paper. Institutes that have the same parent are grouped together, e.g. Google Research, Google Brain and DeepMind are grouped into “Google”; the Max Planck Institutes of Informatics, Intelligent Systems and Quantum Optics are grouped into “Max Planck Institute”. For the sake of clarity, I made a cut-off at 3. The complete list of institutes can be found here.
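The counting rule above can be sketched in a few lines of Python. This is only an illustration: the `PARENT` mapping and the function name are hypothetical, and I assume the cut-off means "keep institutes with at least that many publications".

```python
from collections import Counter

# Hypothetical mapping from an affiliation to its parent institute.
PARENT = {
    "Google Research": "Google",
    "Google Brain": "Google",
    "DeepMind": "Google",
}


def count_by_institute(papers, parent=PARENT, cutoff=3):
    """Count publications per institute.

    `papers` is a list of affiliation lists, one list per paper.
    """
    counts = Counter()
    for affiliations in papers:
        # A set ensures each (grouped) institute is counted once per paper,
        # no matter how many of its authors appear.
        institutes = {parent.get(name, name) for name in affiliations}
        counts.update(institutes)
    # Assumed meaning of the cut-off: drop institutes below the threshold.
    return {name: n for name, n in counts.items() if n >= cutoff}
```

A paper with authors from Google Brain and Google Research therefore adds one to “Google”, not two.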

One can find all top AI institutes from North America, Asia and Europe in this list. Among them, Google is at the top with 9 unique publications using Fashion-MNIST in the experiment, followed by University of Cambridge with 7, and IBM Research, Université de Montréal, Peking University and University of California Los Angeles with 6 publications each. One can also find other tech companies in the full list, e.g. Facebook (2), Telefónica Research (2), Uber (1), Apple (1), Samsung (1), Huawei (1) and Twitter (1). Furthermore, some computer vision startups have worked with Fashion-MNIST and published very interesting results.

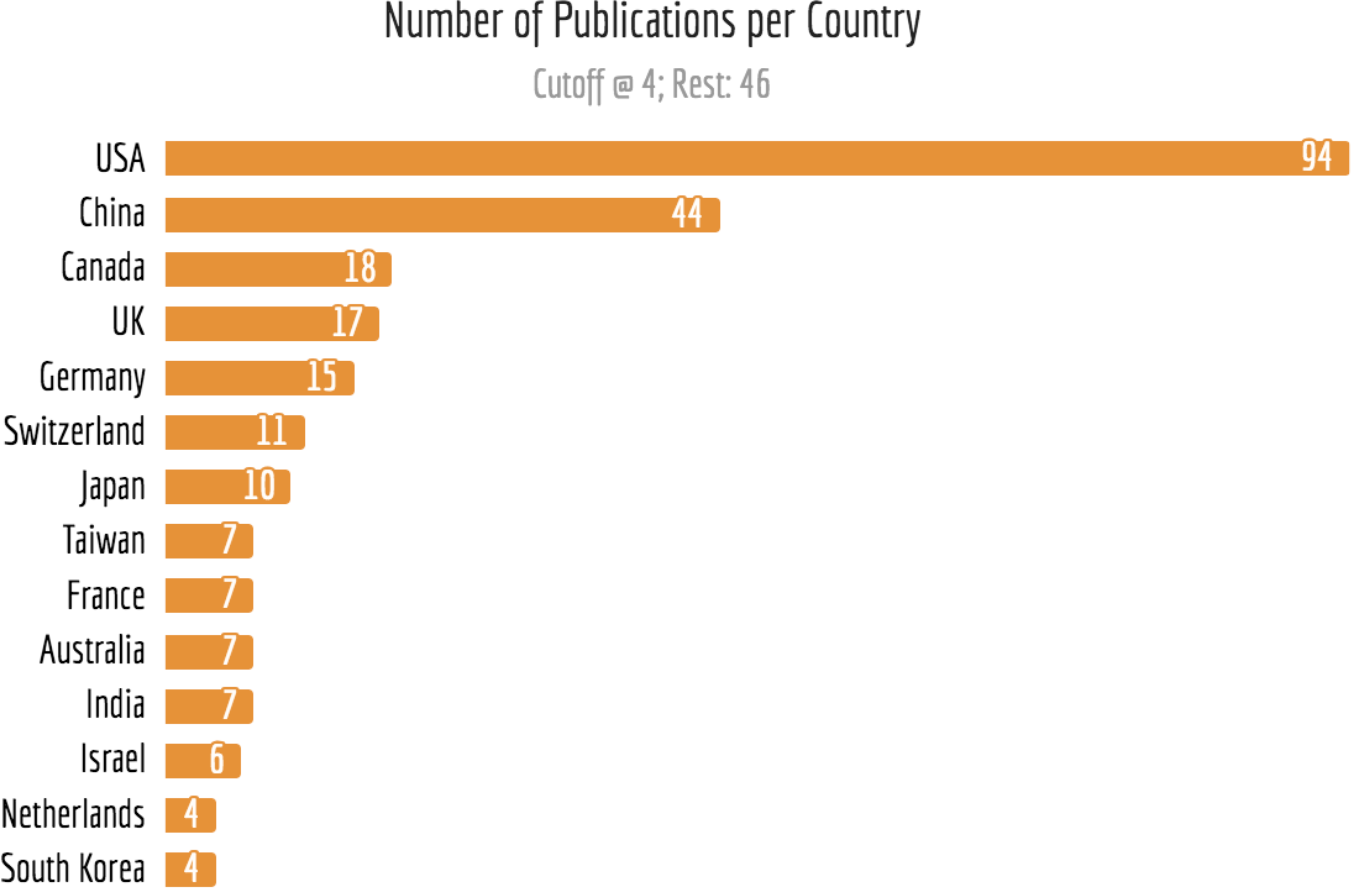

USA and China Dominate the Publications

If we group the publications by country, we get the following results. Again, if one publication is co-authored by researchers from multiple countries, I add one for each country. A cut-off is made at 4. The complete list of countries can be found here.

The USA leads by a wide margin with 94 unique publications, followed by China with 44. Researchers from Canada, the UK and Germany also show strong interest in Fashion-MNIST. These five countries contribute ~50% of the publications. Overall, researchers from 38 different countries have used Fashion-MNIST in their publications.

Despite the ongoing trade war and AI competition between the US and China, the two countries collaborate quite a lot in AI research. Over the past year, they co-authored 10 publications using Fashion-MNIST, more than any other pair of countries. The next pair is the UK and Germany, with 4 co-authored publications. The complete list of country-country collaborations can be found here.
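Counting collaborations between pairs of countries follows the same idea as the per-country tally. Here is a minimal sketch, assuming each paper is represented as a list of author countries; the function name is hypothetical and this is not the script behind the spreadsheet.

```python
from collections import Counter
from itertools import combinations


def count_country_pairs(papers):
    """Count how many papers each unordered pair of countries co-authored."""
    pairs = Counter()
    for countries in papers:
        # Deduplicate, then sort so ("China", "USA") and ("USA", "China")
        # land on the same key.
        for pair in combinations(sorted(set(countries)), 2):
            pairs[pair] += 1
    return pairs
```

A paper with authors from three countries contributes one count to each of its three pairs, while a single-country paper contributes nothing.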

Politics aside, I’d love to see more and more collaboration between countries. With AI technology outpacing institutions, cooperation between countries will be crucial.

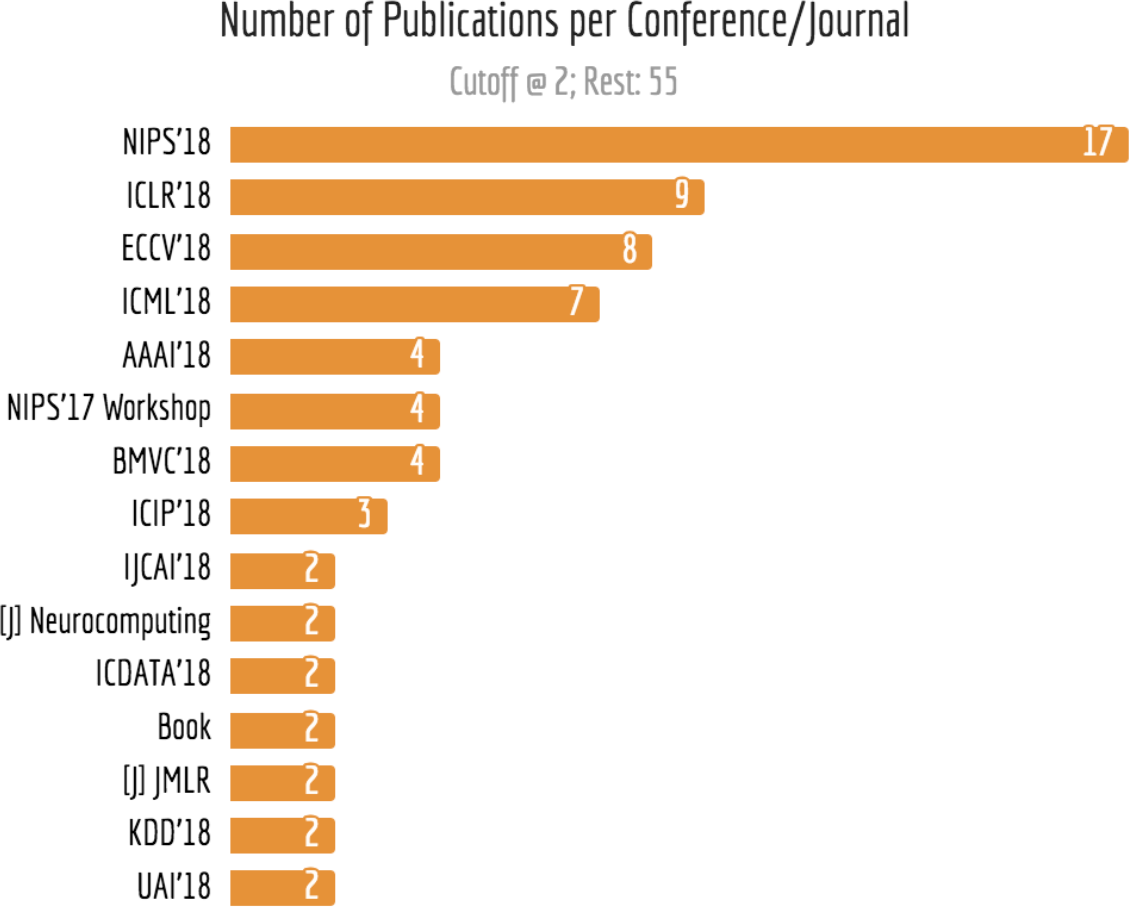

Top-tier Conferences Love Fashion-MNIST

Of course, publications are measured not by quantity but by quality. So where are these papers published? Are they good? The next figure gives you an idea. Note that only accepted papers are counted. Papers that are still under review at a conference or journal are not included in this list. The complete list of conferences/journals can be found here.

Most of the papers were published this year (2018). One can find top-tier conferences such as NIPS, ICLR, ICML, AAAI and ECCV in the list. At NIPS 2018, there are 17 accepted papers using Fashion-MNIST in the experiment. This number is zero for NIPS 2017, which makes total sense, as Fashion-MNIST did not exist at the submission deadline in May 2017. But for the NIPS 2017 workshops, whose deadlines were in Nov., we already saw researchers using Fashion-MNIST in their papers.

Besides the conference publications, there are also some journal publications using Fashion-MNIST, including Journal of Machine Learning Research (2), Neurocomputing (2), Nature Communications (1), Science (1) and other high-impact journals.

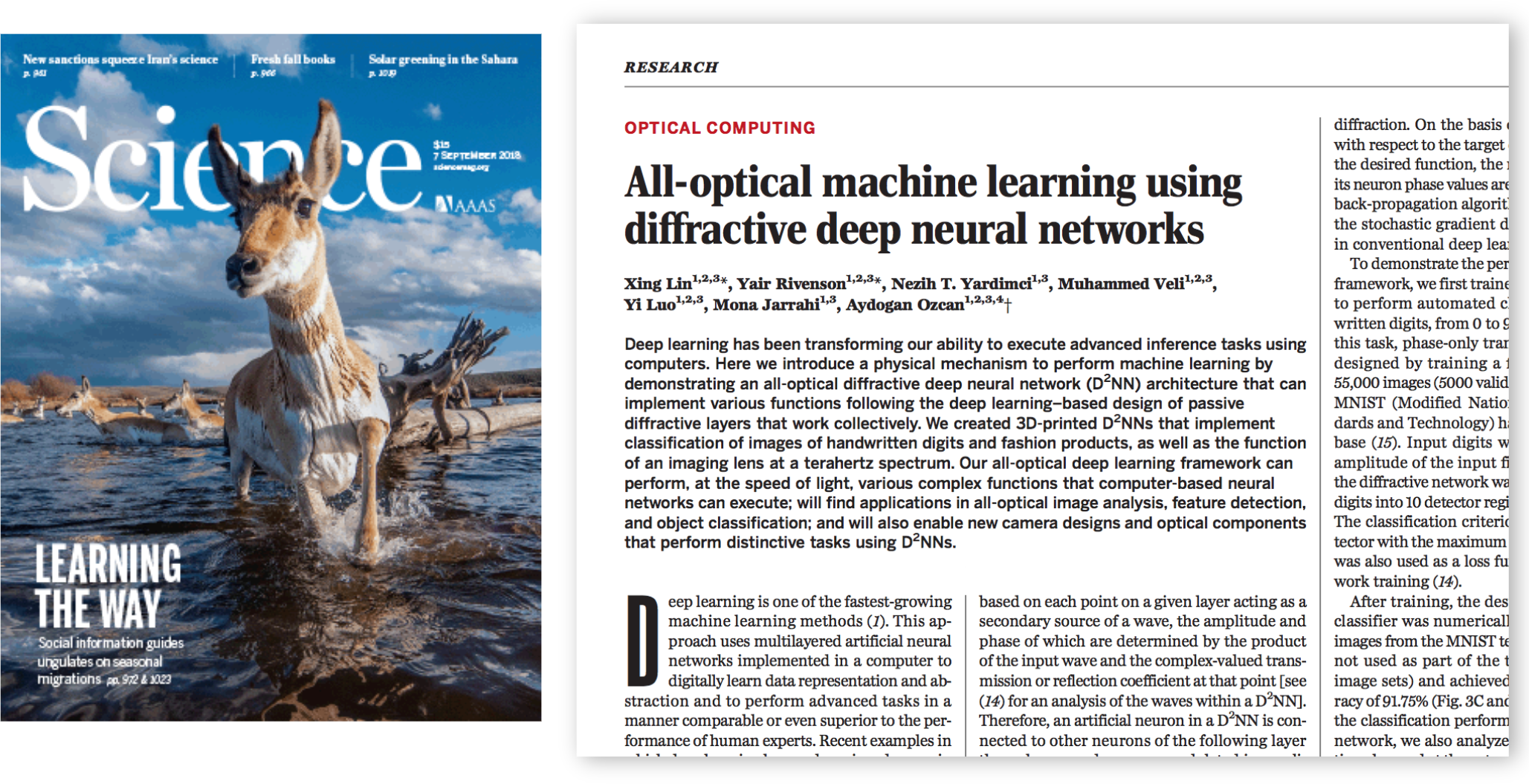

Fashion-MNIST is in Science!

That’s right, the Science, from AAAS.

In the paper “All-optical machine learning using diffractive deep neural networks”, a research team from UCLA built an all-optical diffractive deep neural network architecture and 3D-printed it, so it can classify fashion images at the speed of light!

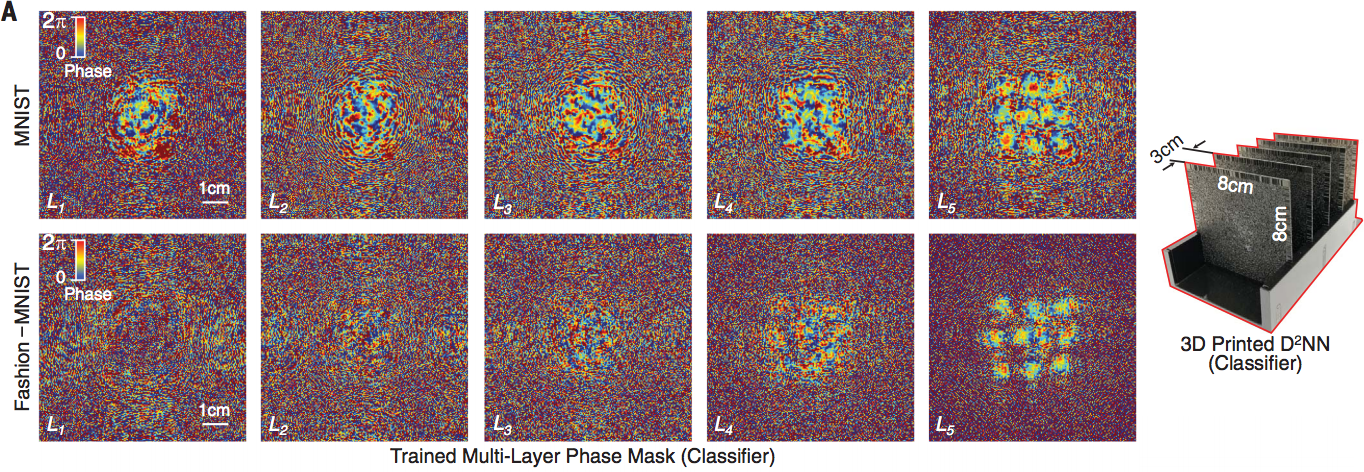

The next figure shows five different trained layers for MNIST and Fashion-MNIST, respectively. These layers can be physically created, where each point on a given layer either transmits or reflects the incoming wave, representing an artificial neuron that is connected to other neurons of the following layers through optical diffraction. On the right side, an illustration of the corresponding 3D-printed all-optical network is shown.

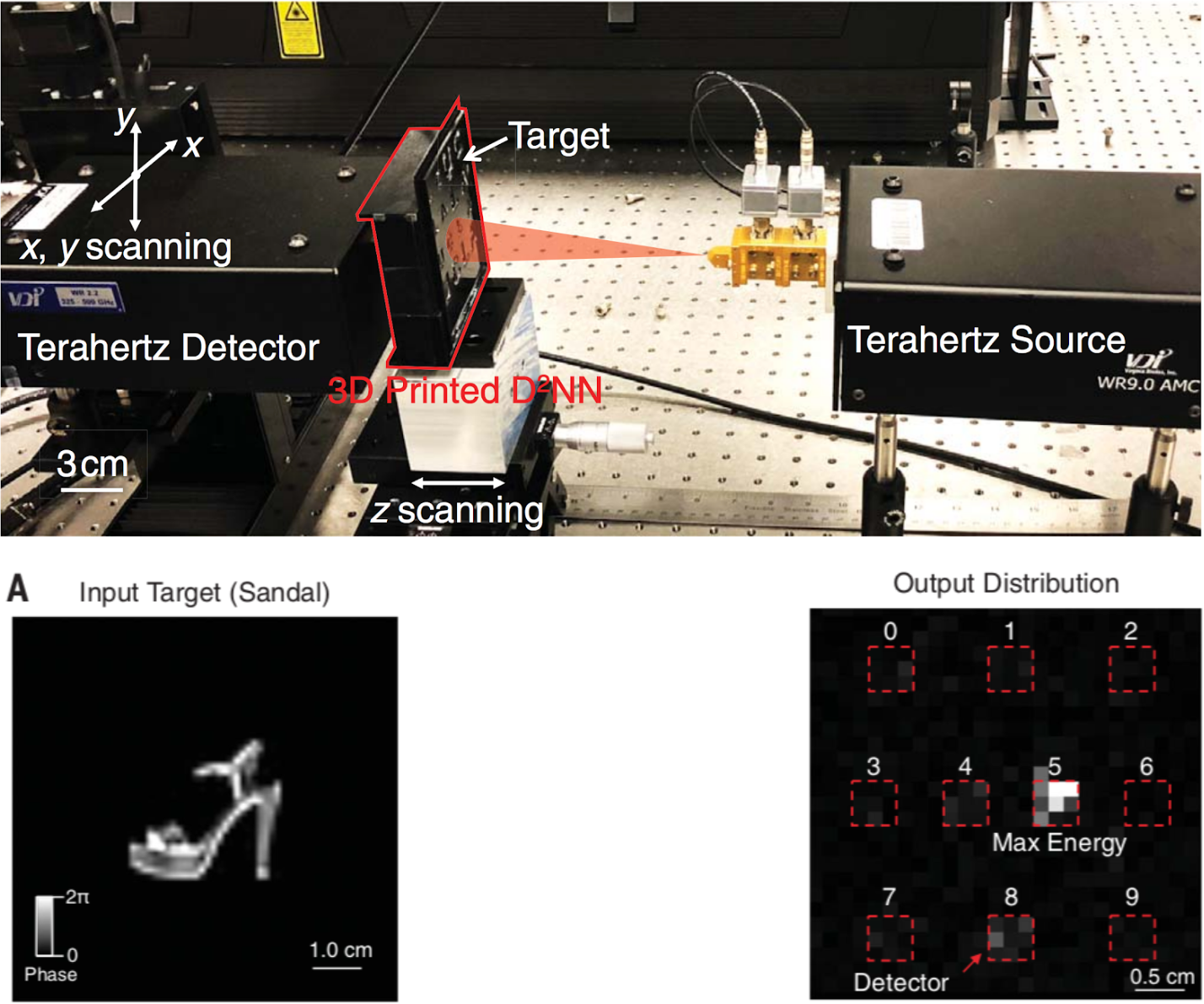

During testing, the 3D-printed network is placed in the environment below. The classification criterion is to find the detector with the maximum optical signal.
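In software terms, this criterion is just an argmax over the detector readings. Here is a minimal sketch, assuming one detector per class with detectors ordered like the Fashion-MNIST label indices; the function name and the signal values in the usage note are made up for illustration.

```python
# The ten Fashion-MNIST class names, indexed by label 0..9.
CLASS_NAMES = [
    "T-shirt/top", "Trouser", "Pullover", "Dress", "Coat",
    "Sandal", "Shirt", "Sneaker", "Bag", "Ankle boot",
]


def classify(detector_signals):
    """Return (label, class name) of the detector with the strongest signal."""
    if len(detector_signals) != len(CLASS_NAMES):
        raise ValueError("expected one signal per class")
    # Argmax: index of the largest reading.
    label = max(range(len(detector_signals)), key=detector_signals.__getitem__)
    return label, CLASS_NAMES[label]
```

For example, `classify([0.1, 0.05, 0.2, 0.9, 0.3, 0.0, 0.25, 0.1, 0.15, 0.05])` picks the fourth detector, i.e. label 3, “Dress”.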

GAN Researchers love Fashion-MNIST

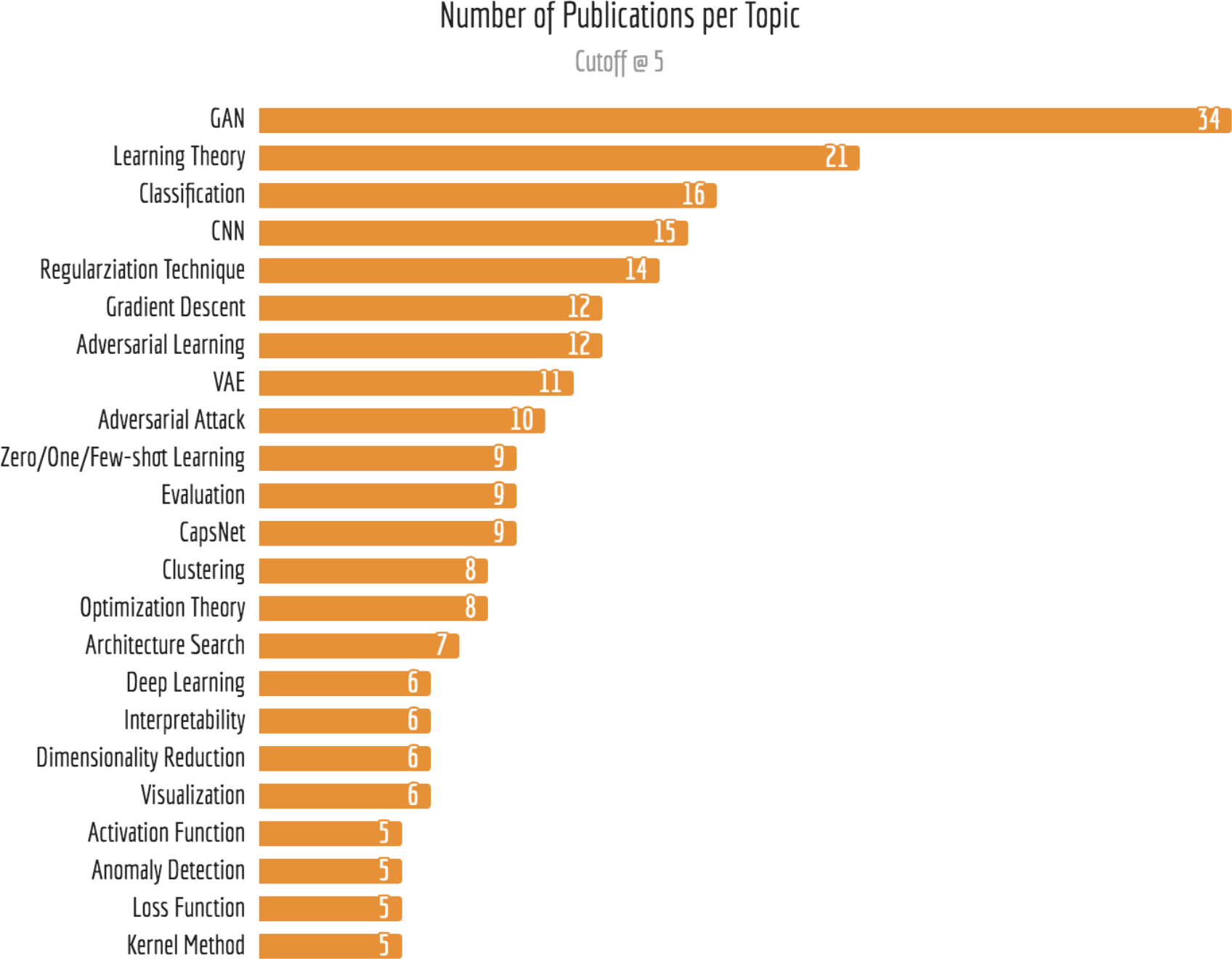

Generative adversarial networks (GANs) have been at the forefront of research on deep neural networks in the last couple of years. GANs have been used for image generation, image processing and many other applications, often leading to state-of-the-art results. It is not surprising that GAN researchers love the Fashion-MNIST dataset: it is small and lightweight, it needs no extra data loader, and its patterns are more complicated and diverse than those of MNIST, which makes it an ideal dataset for validating a quick hack. The next figure shows the publications grouped by keywords. For publications without provided keywords, I read through the abstract and related work section and then extracted a few keywords manually. A cut-off is made at 5. A full list of keywords can be found here.

For Fashion-MNIST, novel learning algorithms and regularization techniques are also hot topics, where researchers often do a sanity check first on standard datasets. Take Capsule Networks, for example: ever since they were published, there has been much discussion about whether they actually work as intended. People question the design decisions made in making CapsNets work on MNIST. Do they generalize to other datasets? Forget about ImageNet, do they work on Fashion-MNIST (same size, same format as MNIST)? Over the past year, there have been 9 new CapsNet publications using Fashion-MNIST in their experiments. They proposed novel routing algorithms to encode the spatial relationships more robustly.

Since 2018, architecture search has gained quite a bit of popularity. The problem arises from the topology selection of neural networks, which has always been a difficult manual task for both researchers and practitioners. Google recently started to offer AutoML as part of its cloud service for automated machine learning. It can design models that achieve accuracies on par with state-of-the-art models designed by machine learning experts. Researchers who study architecture search can test their algorithms more exhaustively on standard datasets such as Fashion-MNIST. One can also confirm this trend by looking at the number of architecture search publications using Fashion-MNIST.

In Community

A few weeks after I published Fashion-MNIST, I was invited to Amazon Berlin to give a talk about it. During the Q&A session, one guy asked me if Fashion-MNIST would just wind up as an excuse for ML researchers who don’t want to work on real-world problems. After all, they can now argue that the model has been “double”-checked on two MNISTs.

The AI community never lets me down. Those high-quality publications already speak for themselves. But besides the hard-core research, this community has found another good use of Fashion-MNIST: teaching. Nowadays, you can find thousands of tutorials, videos, slides, online courses, meetups, seminars and workshops using Fashion-MNIST in Machine Learning 101. Fashion-MNIST also improves the diversity of the community by attracting more young female students, enthusiasts, artists and designers. Their first impression is that the dataset is “cute”, and they therefore feel more engaged when learning ML/AI. In Sept. 2018, during Google Developer Days in China, speakers from Google used Fashion-MNIST in a Keras tutorial to introduce machine learning to hundreds of participants.

Summary

Making progress in the ML/AI field requires joint and consistent efforts from the whole community. I’m really glad that Fashion-MNIST continuously contributes to the community in its own way: it improves the diversity of the community by attracting a broader range of enthusiasts; it encourages researchers to design unequivocal experiments to understand algorithms better. In the future, I will continue maintaining Fashion-MNIST, making it accessible to more people around the globe. It doesn’t matter whether you are a researcher, a student, a professor or an enthusiast. You are welcome to use Fashion-MNIST in papers, meetups, hackathons, tutorials, classrooms or even on T-shirts (God, I should have added this image to the dataset back in the day. MNISTception!). Sharing is caring. In the meantime, happy hacking!

Papers mentioned in this post can be found in this Google Spreadsheet.